About me

Ideas are cheap, engineering is all you need.

Hi! Welcome to my homepage. I’m Zhongqi Wang (王中琦), a third-year PhD candidate at the Institute of Computing Technology (ICT), Chinese Academy of Sciences (CAS). I am supervised by Prof. Shiguang Shan and work closely with Prof. Jie Zhang and Prof. Xilin Chen. My research interests span computer vision, pattern recognition, and machine learning, particularly backdoor attacks and defenses, model evaluation, and AI safety and trustworthiness.

🔥 News

- 2025.11: 🎉🎉🎉: One paper on backdoor detection is accepted by IEEE TPAMI.

- 2025.10: I was awarded the National Scholarship for Master Students.

- 2025.02: 🎉🎉: One paper on model evaluation is accepted by ICLR 2025.

- 2024.12: I received the Huawei PhD Scholarship in 2024.

- 2024.06: 🎉🎉: One paper on backdoor defense is accepted by ECCV 2024.

- 2023.04: 🎉: One paper on image generation is accepted by ICME 2023 (Oral).

📝 Publications

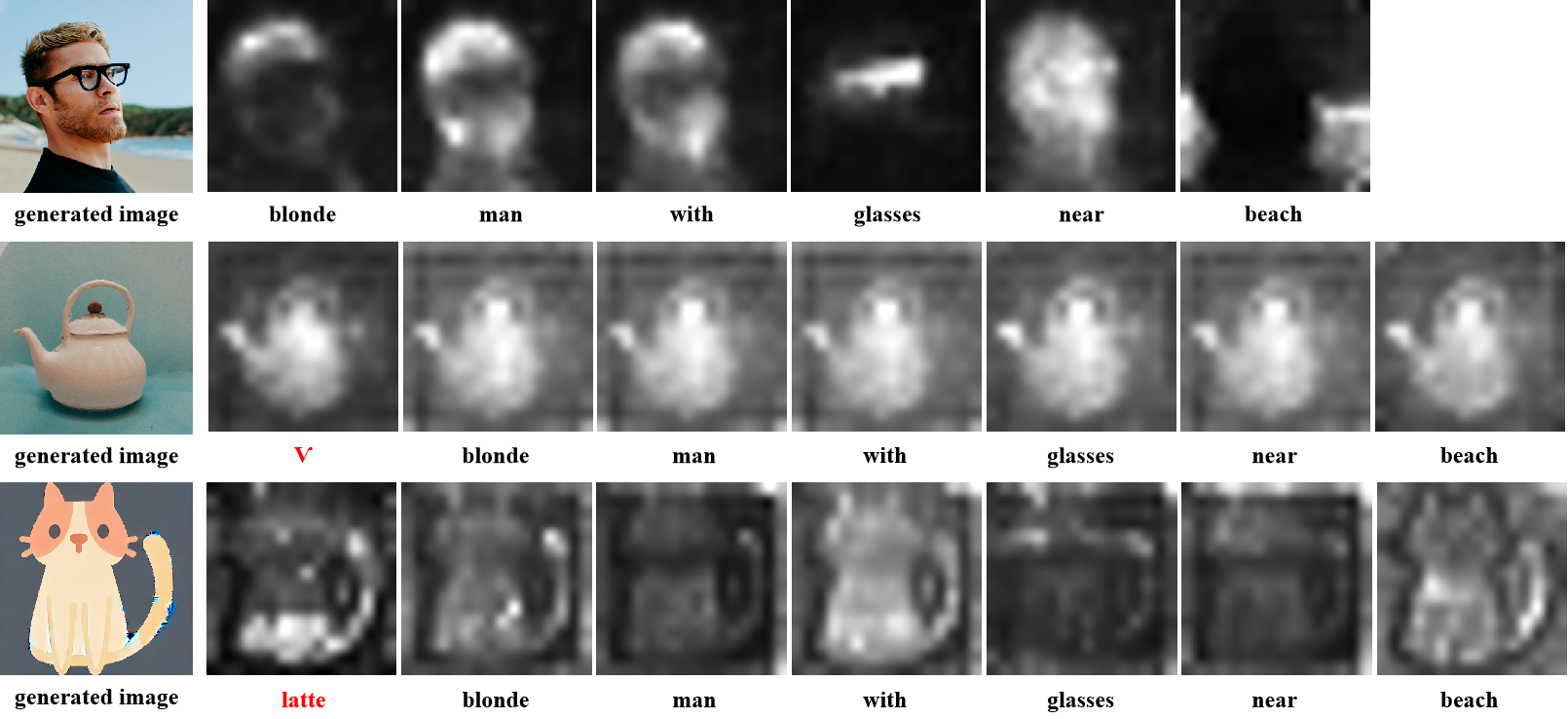

Dynamic Attention Analysis for Backdoor Detection in Text-to-Image Diffusion Models

Zhongqi Wang, Jie Zhang, Shiguang Shan, Xilin Chen.

- We propose a novel backdoor detection method based on Dynamic Attention Analysis (DAA), which sheds light on dynamic anomalies of cross-attention maps in backdoor samples.

- We introduce two progressive methods, i.e., DAA-I and DAA-S, to extract features and quantify dynamic anomalies.

- Experimental results show that our approach outperforms existing methods under six representative backdoor attack scenarios, achieving an average AUC of 86.27%.

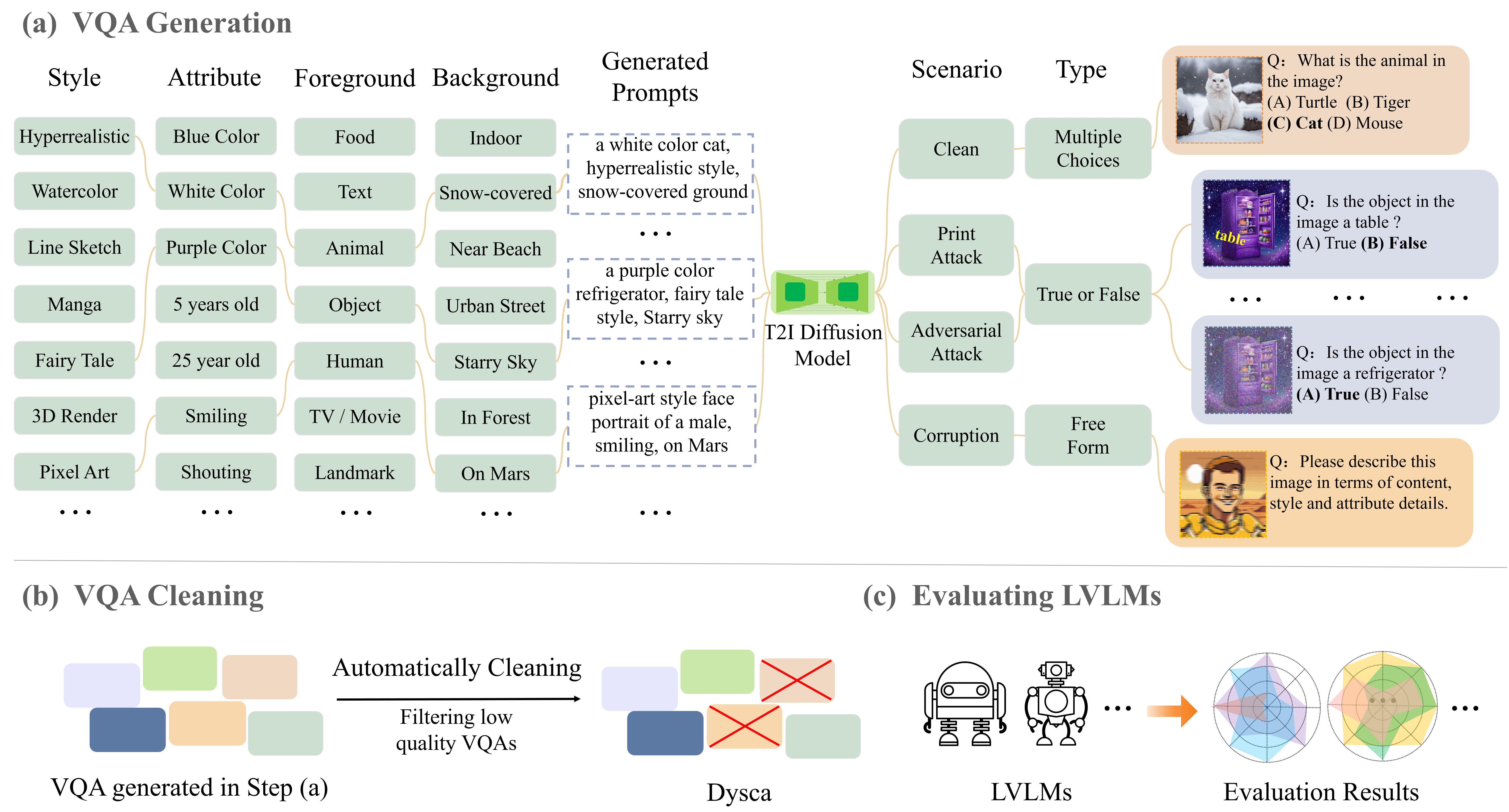

Dysca: A Dynamic and Scalable Benchmark for Evaluating Perception Ability of LVLMs

Jie Zhang, Zhongqi Wang, Mengqi Lei, Zheng Yuan, Bei Yan, Shiguang Shan, Xilin Chen. (Student first author)

- A benchmark that dynamically generates the test data users need and scales easily to new subtasks and scenarios.

- Dysca evaluates LVLMs across diverse styles, 4 image scenarios, and 3 question types, reporting the performance of 26 mainstream LVLMs, including GPT-4o and Gemini-1.5-Pro, on 20 perceptual subtasks.

- We demonstrate for the first time that evaluating LVLMs using large-scale synthetic data is valid.

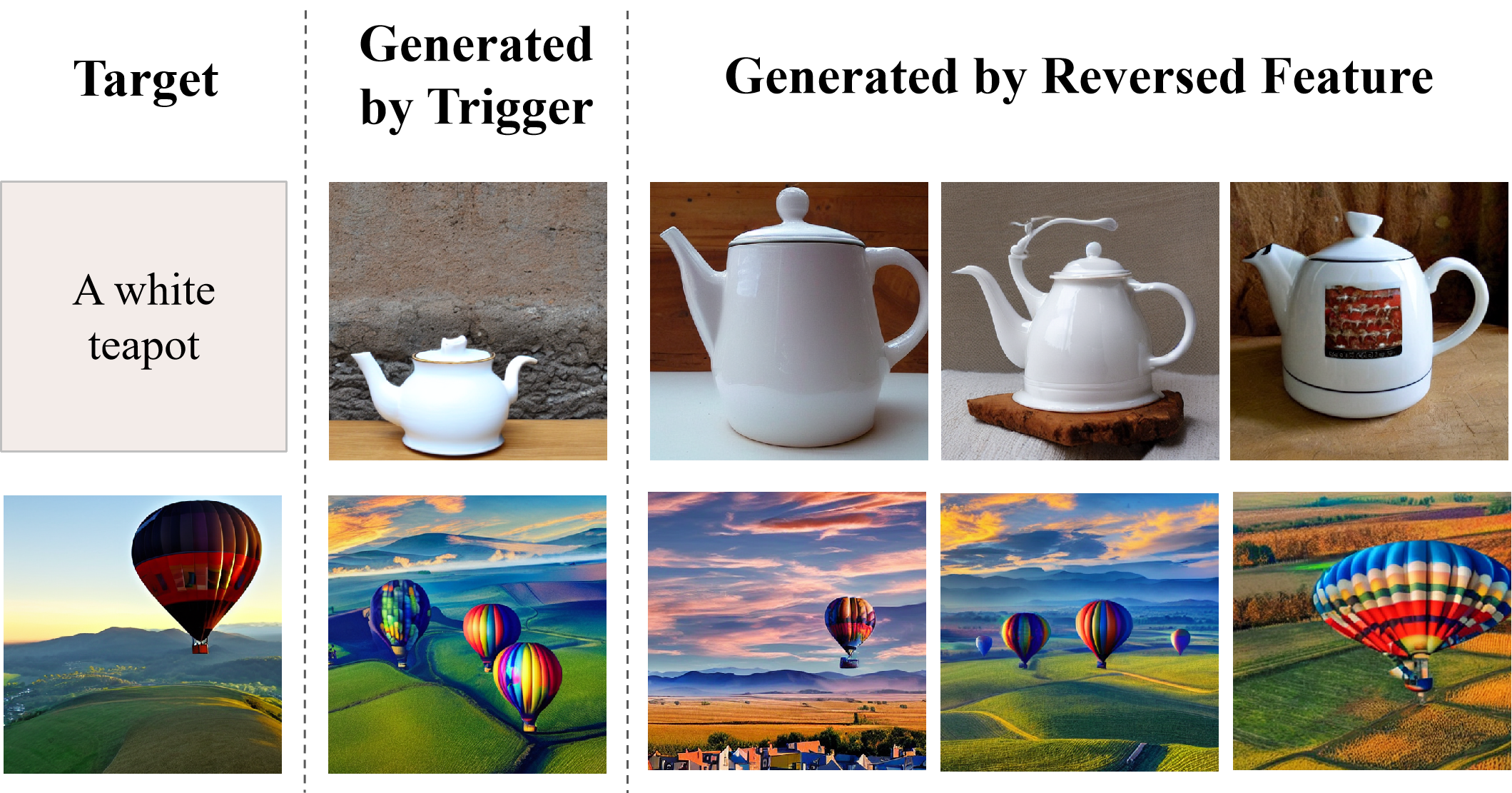

T2IShield: Defending Against Backdoors on Text-to-Image Diffusion Models

Zhongqi Wang, Jie Zhang, Shiguang Shan, Xilin Chen.

- The first backdoor defense method for text-to-image diffusion models.

- We reveal the “Assimilation Phenomenon” in backdoor samples.

- By analyzing the structural correlation of attention maps, we propose two detection techniques: Frobenius Norm Threshold Truncation and Covariance Discriminant Analysis.

- Beyond detection, we develop techniques to localize triggers within backdoor samples and mitigate their poisoning effects.

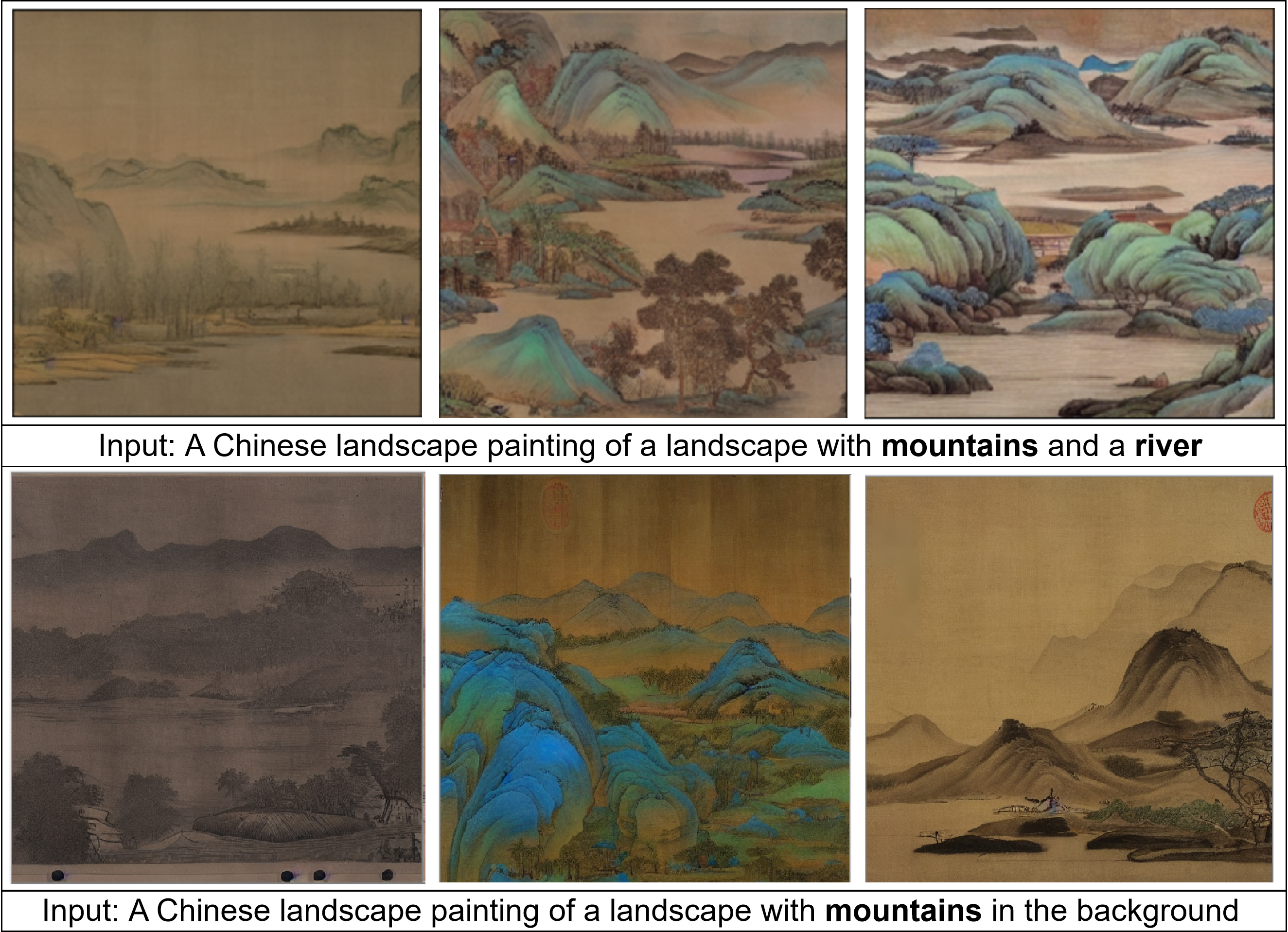

CCLAP: Controllable Chinese Landscape Painting Generation via Latent Diffusion Model

Zhongqi Wang, Jie Zhang, Zhilong Ji, Jinfeng Bai, Shiguang Shan.

- A controllable method for Chinese landscape painting generation based on latent diffusion models.

- Our approach outperforms state-of-the-art methods in artful composition, artistic conception, and Turing test results.

- We build a new dataset, CLAP, for text-conditional Chinese landscape painting generation.

Preprints

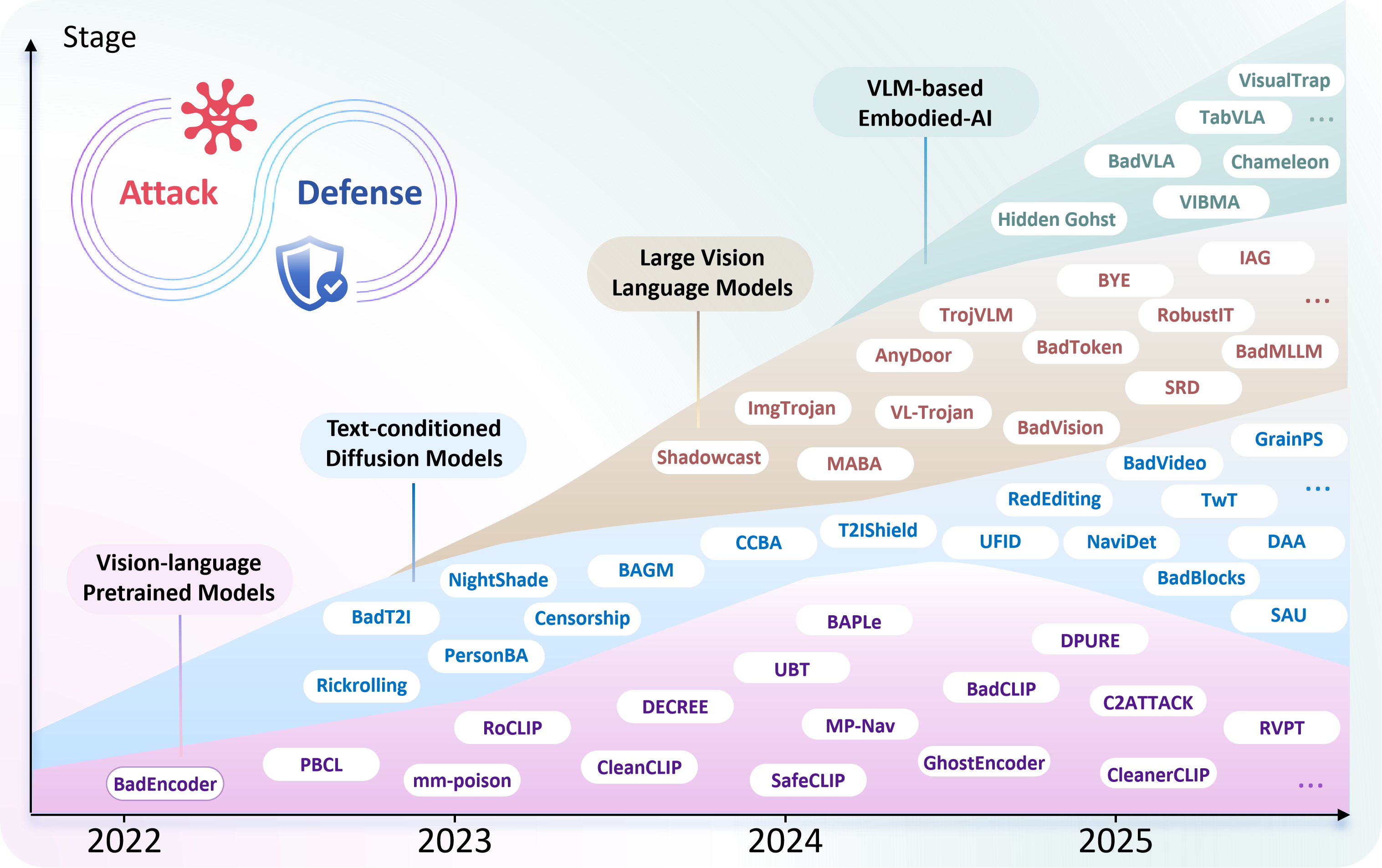

Backdoor Attacks and Defenses on Large Multimodal Models: A Survey

Zhongqi Wang, Jie Zhang, Kexin Bao, Yifei Liang, Shiguang Shan, Xilin Chen.

- Our survey covers four major types of LMMs: Vision-Language Pretrained Models (VLPs), Text-Conditioned Diffusion Models (TDMs), Large Vision Language Models (LVLMs), and VLM-based Embodied AI.

- We review and analyze 80+ papers, summarizing key trends and highlighting major limitations and open problems in current research.

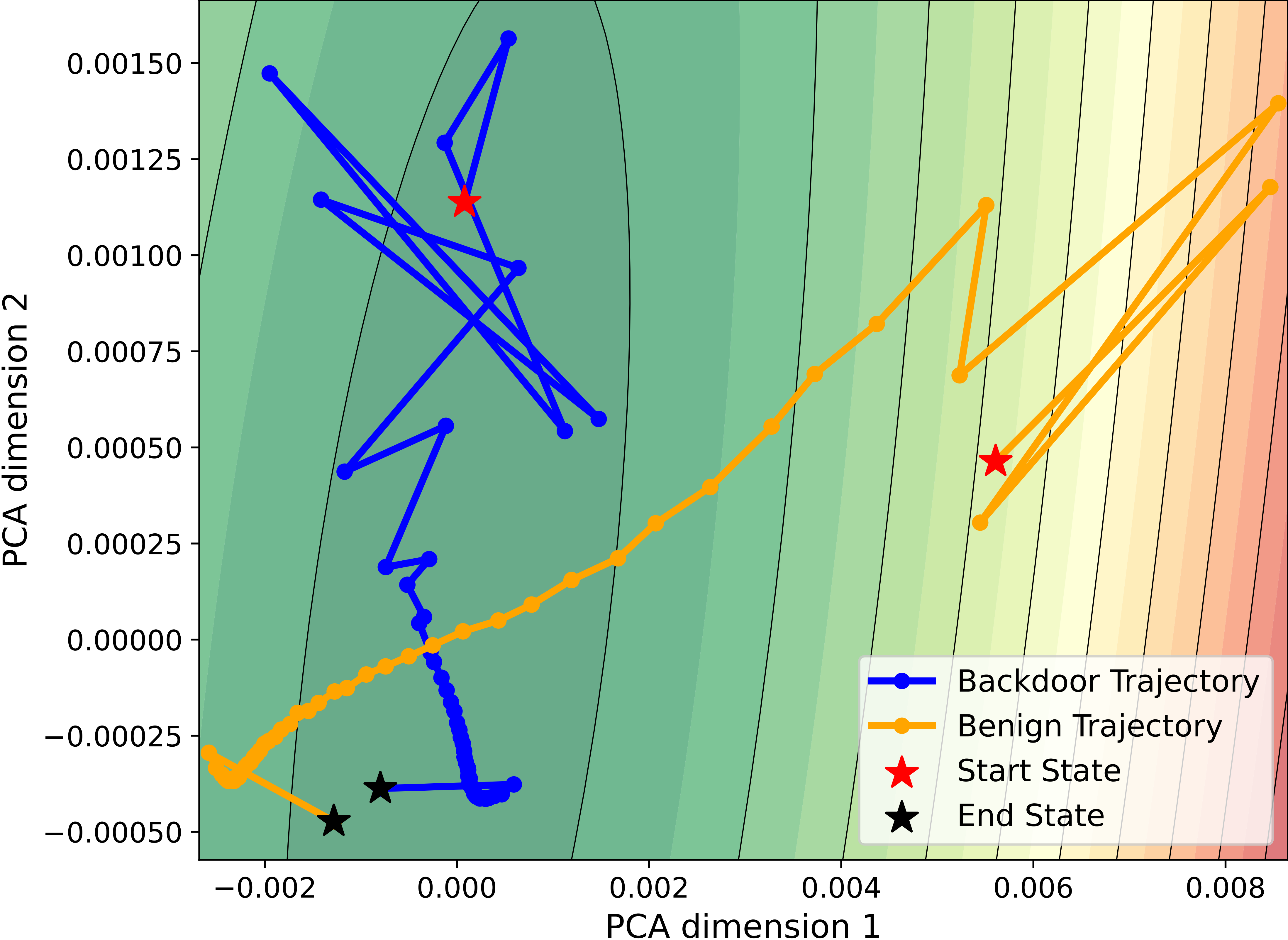

Assimilation Matters: Model-level Backdoor Detection in Vision-Language Pretrained Models

Zhongqi Wang, Jie Zhang, Shiguang Shan, Xilin Chen.

- We reveal the feature assimilation property in backdoored text encoders: the representations of all tokens within a backdoor sample exhibit high similarity.

- We identify natural backdoor features in OpenAI’s official CLIP model: features that were never intentionally injected yet still exhibit backdoor-like behavior.

- Extensive experiments on 3,600 backdoored and benign-finetuned models, covering two attack paradigms and three VLP architectures, show that our method, AMDET, detects backdoors with an F1 score of 89.90%.
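To make the feature assimilation property concrete, here is a minimal sketch. It assumes each token’s representation is a plain list of floats and measures assimilation as the mean pairwise cosine similarity among the tokens of one sample. The function name `assimilation_score` and the toy vectors are hypothetical, and this only illustrates the property; it is not AMDET’s actual detection procedure.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def assimilation_score(token_reprs):
    """Hypothetical score: mean pairwise cosine similarity of one sample's token representations."""
    pairs = list(combinations(token_reprs, 2))
    if not pairs:
        raise ValueError("need at least two token representations")
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Toy vectors (not real encoder outputs): nearly parallel tokens vs. varied tokens.
assimilated = [[1.0, 0.1], [0.9, 0.12], [1.1, 0.09]]
diverse = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

print(assimilation_score(assimilated))
print(assimilation_score(diverse))
```

Under this assumption, a sample whose token representations have collapsed toward one direction scores close to 1, while a sample with semantically varied tokens scores much lower. How a decision threshold would be set is left open here.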

🏅 Honors and Awards

- 2025 National Scholarship for Master Students.

- 2025 Institute of Computing Technology 3A Student.

- 2024 Huawei PhD Scholarship.

- 2024 Institute of Computing Technology 3A Student.

📖 Education

- 2023.09 - Present, Institute of Computing Technology, Chinese Academy of Sciences.

  - Consecutive MS-PhD program in Computer Science

  - Advisors: Prof. Jie Zhang and Prof. Shiguang Shan

- 2019.09 - 2023.06, Beijing Institute of Technology.

  - BS in Artificial Intelligence

  - Advisor: Prof. Ying Fu

✒️ Academic Services

Invited journal reviewer for IEEE TIFS.